My query just got faster - brief introduction to 12.2 in-memory cursor duration temp tables

Image courtesy of Wikipedia

This post covers one of the new SQL performance enhancements that we incorporated into Database 12c Release 2. All of these enhancements are completely automatic, i.e. transparent to the calling app/developer code/query. These features are enabled by default because who doesn’t want their queries running faster with zero code changes?

So in this post I am going to focus on the new In-Memory “cursor duration” temporary table feature. Let’s start by looking at cursor duration temp tables…

Above image courtesy of wikimedia.org

What is a cursor duration temp table?

This is a feature that has been around for quite a long time. Cursor duration temporary tables (CDTs) are used to materialize intermediate results of a query to improve performance or to support complex multi-step query execution. The following types of queries commonly use cursor duration temp tables:

- WITH Clause and parallel recursive WITH

- Grouping Sets

- Star Transformation

- Frequent Item Set Counting

- XLATE
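To make the first item in that list a little more concrete, here is a minimal sketch of a WITH clause query against the sales history (SH) sample schema. The subquery name channel_totals is my own invention for illustration. Because the subquery is referenced twice, the optimizer may decide to materialize it into a cursor duration temp table, although that decision is entirely down to the optimizer and is not guaranteed for any given query or data set.

```sql
-- Sketch only: channel_totals is a hypothetical name chosen for this example.
-- The subquery is referenced twice (main query and scalar subquery), which
-- makes it a candidate for materialization into a cursor duration temp table.
WITH channel_totals AS (
  SELECT c.channel_class,
         SUM(s.amount_sold) AS revenue
  FROM   sales s, channels c
  WHERE  c.channel_id = s.channel_id
  GROUP  BY c.channel_class
)
SELECT ct.channel_class,
       ct.revenue
FROM   channel_totals ct
WHERE  ct.revenue > (SELECT AVG(revenue) FROM channel_totals)
ORDER  BY ct.revenue DESC;
```

If the optimizer does materialize the subquery, the plan will include a TEMP TABLE TRANSFORMATION step.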

So what is an In-Memory cursor duration temp table?

An In-Memory cursor duration temp table (IM CDT) is simply a CDT whose data is stored in memory, which results in a “significant” performance boost for queries that make multiple passes over a data set or need to materialize intermediate results. The switch to using in-memory tables means that your queries should see a reduction in I/O, since each pass over the data set does not incur additional I/O operations to access that data.

There may be times when there is insufficient memory available to load all the data, so what happens in these situations? When this happens, local (private) temp segments are allocated to store the excess data, and a query against an in-memory cursor duration temp table reads both the memory and the private temp segments to return the data.

Will all my queries use this new feature?

Our internal algorithms determine when and how this new feature is used. At the moment only serial queries that make multiple passes over a data set or queries that need to materialize intermediate results will use this feature. Don’t be concerned if your queries do not end up using these new in-memory cursor duration temp tables. There is nothing you can do to force their use within a query. The point of this post is to simply make you aware of a new term that could, potentially, appear in your explain plans. Obviously, going forward we will explore the possibility of expanding the scope of this feature to cover other types of queries.

Do I need to license the Database In-Memory option?

No. There is no need to license the costed Database In-Memory option. Of course, if you are using the Exadata and Database Cloud services then really useful analytical options such as advanced analytics (data mining, machine learning), spatial and graph analytics and in-memory are included in most of the service prices. If that isn’t a good enough reason to move to the Oracle Cloud then I don’t know what is!

Where does the memory come from?

In broad general terms the memory used by In-Memory “cursor duration” temporary tables comes from the PGA pool. Does this mean you might need to increase the size of your PGA memory area? As usual, “it depends…” on a lot of different factors, including whether you have a lot of queries today that use cursor duration temporary tables and which are likely to switch over to using the new in-memory cursor duration temporary tables. All I can say is: monitor your usage of PGA and determine if you need to increase the size of your PGA because you are running out of resources. Of course, if a query cannot allocate sufficient memory to use in-memory “cursor duration” temporary tables it will simply revert to using the pre-12.2 “cursor duration” temporary tables.

That’s the background stuff all covered, so now we can look at a couple of SQL code examples to see how this all works in practice.
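Before moving on, here is one possible way to act on the "monitor your usage of PGA" advice. This is only a sketch: it assumes your account can read the V$PGASTAT view, and the four statistics shown are simply the ones I would start with rather than a definitive list.

```sql
-- Sketch: snapshot of instance-wide PGA usage, converted to MB.
-- Requires SELECT access to the V$ views.
SELECT name,
       ROUND(value / 1024 / 1024, 1) AS value_mb
FROM   v$pgastat
WHERE  unit = 'bytes'
AND    name IN ('aggregate PGA target parameter',
                'total PGA allocated',
                'total PGA inuse',
                'maximum PGA allocated');
```

If 'maximum PGA allocated' is regularly running well above the target, that is a hint that the PGA sizing deserves a closer look.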

Sample Schema

Let’s look at a simple query using the sales history sample schema (to download this sample schema go to the database downloads page on OTN, find your operating system and click on the “See All” link, then scroll down until you find the download link for the “Oracle Database xxxxx Examples”. Finally, follow the installation instructions in the Oracle Documentation to install the SH schema into your database instance).

Alternatively, you can access the sales history schema using our new web-based LiveSQL. You will need to create an account if you don’t already have one.

Example: GROUP BY with GROUPING SETS

What we want to find is the total revenue for each product category and promotion during the period 01-Jan-1998 to 31-Dec-2000 (the date range used in the query below), along with the total sales in each channel. We can do this by using the GROUP BY GROUPING SETS feature (a very big topic for another day). Here’s the query we need to run:

    SELECT /*+MONITOR */
      p.prod_category,
      x.promo_id,
      c.channel_class,
      t.calendar_quarter_desc,
      s.time_id,
      SUM(s.amount_sold)
    FROM sales s, products p, promotions x, channels c, times t
    WHERE s.time_id
      BETWEEN to_date('1998-01-01', 'YYYY-MM-DD')
      AND to_date('2000-12-31', 'YYYY-MM-DD')
    AND p.prod_id = s.prod_id
    AND x.promo_id = s.promo_id
    AND c.channel_id = s.channel_id
    AND t.time_id = s.time_id
    GROUP BY GROUPING SETS
      ((p.prod_category, x.promo_id),
       (c.channel_class, t.calendar_quarter_desc),
       (p.prod_category, t.calendar_quarter_desc),
       (s.time_id, t.calendar_quarter_desc))
    ORDER BY 1,2,3,4,5

Just to add some additional functionality to this example I am going to include a hint for parallel query and a hint that will allow me to access the real-time SQL monitoring within SQL Developer. For more information about this really great feature, read this blog post by ThatJeffSmith: On Real-Time SQL Monitoring and the /*+MONITOR*/ Hint.

The examples below are not going to show huge performance gains, simply because I am using a very small data set (the sales history sample schema) and I am running the queries in two separate VirtualBox machines on my laptop, and the Big Data Lite image is also running a lot of other features, such as a complete Hadoop environment. Therefore, you just need to stay focused on the changes to I/O in the examples below.

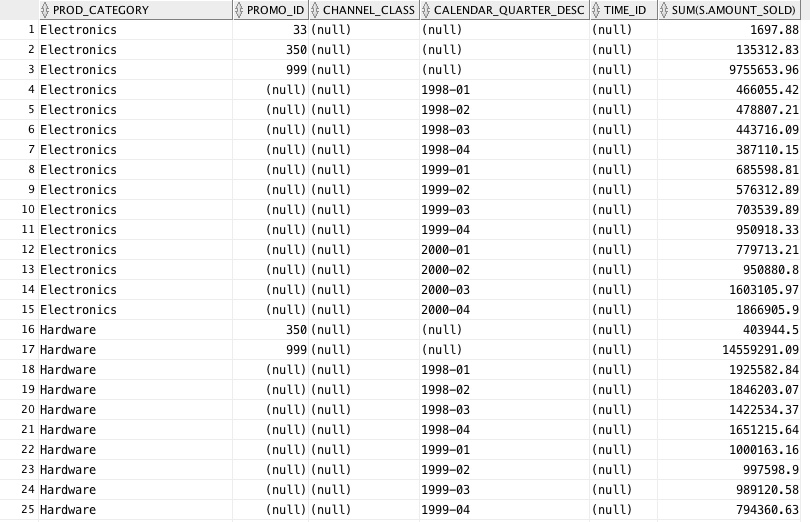

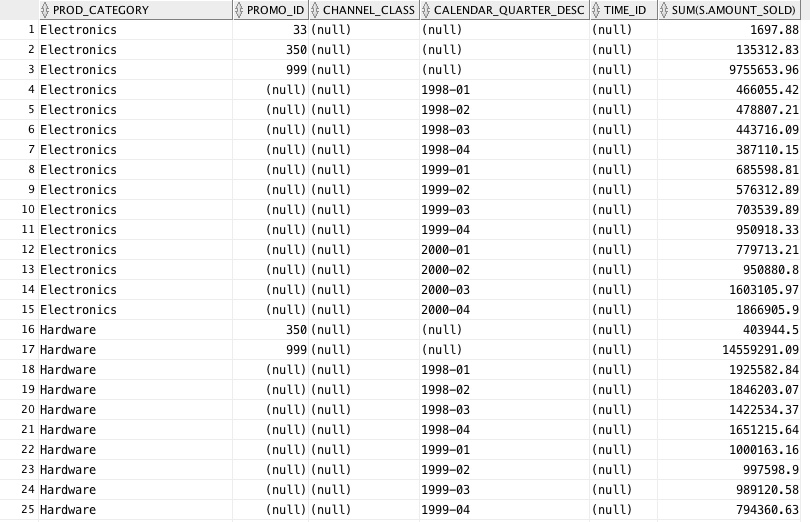

Part A, pre-12.2: using cursor duration temp tables

Using the GROUPING SETS feature we will create totals for each specific combination of product category, promo_id and channel class, along with quarters and months. The output is quite long because there are quite a few permutations of dimension members even in the sales history schema, so here is the first block of rows returned into SQL Developer so you can get a sense of the structure of the data set that is returned. This query is running in a 12.1 Database (I am actually using one of the current releases of the Big Data Lite VirtualBox image that includes Database 12c Release 1). I have truncated the output but you can see most of the results for the first GROUPING SET of prod_category and promo_id. The query does return a lot more rows: 1,205 rows.

The monitoring report in SQL Developer for the above query looks like this:

We can see that there are a number of distinct blocks of work but at the top of the plan we can see the TEMP TABLE TRANSFORMATION reference followed by LOAD AS SELECT with no further keywords, which is expected because we are not using 12.2 to run this query. About half-way down the report you can see the additional LOAD AS SELECT statements against the temp table containing the base level information we need to create the various total combinations within the GROUPING SETS clause.

If you want more information on the temp table transformation step then there is an excellent post on the optimizer blog: https://blogs.oracle.com/optimizer/entry/star_transformation. From the plan below you can see that our temp table is then reused during the rest of the query to construct the various totals for each combination of dimension members.

We can see that we are incurring I/O during each phase of the query: we are making 684 I/O requests and 172.6MB of I/O Bytes. Given that I am using two VMs running at the same time I don’t see much point in focusing on the actual execution time. So that’s our plan running in Database 12c Release 1.
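If you do not have SQL Developer to hand, a text version of the same kind of monitoring report can be pulled directly from SQL. The following is only a sketch: it assumes you are licensed to use SQL monitoring (it is part of the Tuning Pack), that your account can read V$SQL_MONITOR, and that you are working in SQL*Plus or a similar client, where &sql_id is a substitution placeholder for the SQL_ID you pick from the first query.

```sql
-- Step 1: list the most recently monitored statements to find the SQL_ID.
SELECT sql_id, status, sql_exec_start
FROM   v$sql_monitor
ORDER  BY sql_exec_start DESC
FETCH FIRST 5 ROWS ONLY;

-- Step 2: text report for one statement.
-- &sql_id is a placeholder; the report comes back as a CLOB, so in SQL*Plus
-- you will probably want SET LONG 1000000 before running this.
SELECT DBMS_SQLTUNE.REPORT_SQL_MONITOR(
         sql_id       => '&sql_id',
         type         => 'TEXT',
         report_level => 'ALL') AS report
FROM   dual;
```

The text report carries the same plan lines and I/O columns, so the I/O requests and I/O bytes can be compared between releases in the same way.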

Part B, 12.2: using in-memory cursor duration temp tables

Now let’s switch over to using our 12.2 database - I am using the latest developer VirtualBox image that is posted on OTN. Using this environment we can see that if we re-run our query the result, in terms of rows returned, is the same, which is always great news.

Let’s take a look at the monitoring report for the same query now running in Database 12c Release 2:

The first thing to notice when comparing the two monitoring reports is that we have significantly reduced the amount of I/O in 12.2. In 12.1 our relatively simple grouping set query generates 684 I/O requests and 172.6MB of I/O. Compare that with the data in the monitoring report for the same query running in 12.2: 40 I/O requests and 9.9MB of I/O. This means that we have managed to improve the overall efficiency of our query by simply upgrading to 12.2.
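To put those numbers into perspective, the percentage reduction can be worked out with a quick bit of arithmetic. The figures are simply the ones quoted from my two monitoring reports; the column aliases are just labels I made up for this sketch.

```sql
-- Percentage reduction in I/O between the 12.1 and 12.2 runs,
-- using the figures from the two monitoring reports.
SELECT ROUND(100 * (1 - 40 / 684), 1)    AS io_requests_reduction_pct,
       ROUND(100 * (1 - 9.9 / 172.6), 1) AS io_bytes_reduction_pct
FROM   dual;
-- returns 94.2 and 94.3
```

So in this small test both the number of I/O requests and the volume of I/O dropped by roughly 94%.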

Obviously your mileage will vary according to the details of the query you are executing, but that is a nice boost to resource efficiency and performance that has required zero code changes, and it’s completely FREE!

In summary, with this GROUPING SET example we have reduced the amount of I/O and the number of I/O requests through the use of in-memory cursor duration temp tables. As with the previous report, you will see continual references to “LOAD AS SELECT”. However, in 12.2 there is an additional set of keywords which identify the use of the new in-memory cursor duration temp tables:

LOAD AS SELECT (CURSOR DURATION MEMORY)

In the bottom half of the report you should notice that the above statement covers two additional plan lines, HASH (GROUP BY) and TABLE ACCESS (FULL), which reference the temp table object. However, there are no I/O operations against them, which confirms the use of in-memory cursor duration temp tables.

Summary

This post has covered just one of the many new SQL performance enhancements that we incorporated into Database 12c Release 2. I covered the most important features in my presentation at last year’s OpenWorld. A lot of these enhancements, including In-Memory “cursor duration” temporary tables, are completely automatic, i.e. transparent to the calling app/query, and they are enabled by default because who doesn’t want their queries running faster with zero code changes? Within this release of 12.2 we are limiting their use to just serial queries of the types listed at the start of the post.

Just to be absolutely clear - there are no hints or parameters you can set to force the use of In-Memory “cursor duration” temporary tables. Our internal algorithms will determine if this feature is used within your query. If In-Memory “cursor duration” temporary tables are used then you will see the following lines in your explain plans:

LOAD AS SELECT (CURSOR DURATION MEMORY)
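If you want to check one of your own queries, a simple approach is to generate the explain plan and look for those keywords. The sketch below uses a cut-down GROUPING SETS query of my own against the SH schema (it is not the query used earlier in this post) and assumes the default PLAN_TABLE is available. Whether the keywords actually appear depends on your release and on what the optimizer decides to do.

```sql
-- Sketch: generate a plan for a small GROUPING SETS query ...
EXPLAIN PLAN FOR
SELECT p.prod_category,
       c.channel_class,
       SUM(s.amount_sold)
FROM   sales s, products p, channels c
WHERE  p.prod_id = s.prod_id
AND    c.channel_id = s.channel_id
GROUP  BY GROUPING SETS ((p.prod_category), (c.channel_class));

-- ... then display it and look for
-- LOAD AS SELECT (CURSOR DURATION MEMORY) in the operation column.
SELECT plan_table_output
FROM   TABLE(DBMS_XPLAN.DISPLAY(format => 'BASIC'));
```

If you only see LOAD AS SELECT with no further keywords then the query is using the pre-12.2 style of cursor duration temp table.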

As I have outlined above, there are definite efficiency benefits to be gained from using this feature due to the reduction in I/O, which should also improve overall query performance - although your mileage will vary depending on your particular environment! If you would like to share your experiences of using this new feature then please contact me via email (keith.laker@oracle.com).
