Sessionization with 12c SQL pattern matching is super fast

To help us evaluate our new 12c pattern matching feature our development team ran a series of performance tests using the TPC-H schema. The data set contained approximately seven years of data which consumed about 1TB of space. As part of the tests we ran two different searches:

This creates an output result set that delivers a simplified sessionization data set as shown here:

The SQL to create the initial result set is as follows:

However, to get business value from this derived data set we need to do some additional processing. Typically, with this kind of analysis the business value of within the data emerges only after aggregation which in this case needs to by session. We need to reduce the data set to a single tuple, or row, per session with some derived attributes

Here is code based on using the PREV() function to check the current record against the previous record:

The output would look something like this:

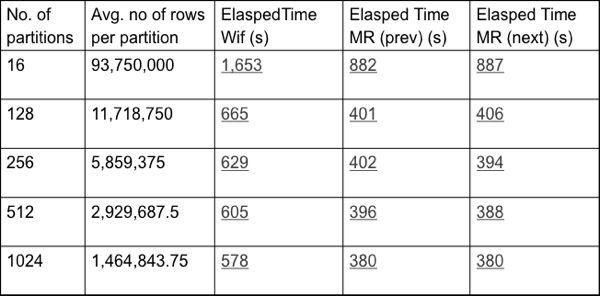

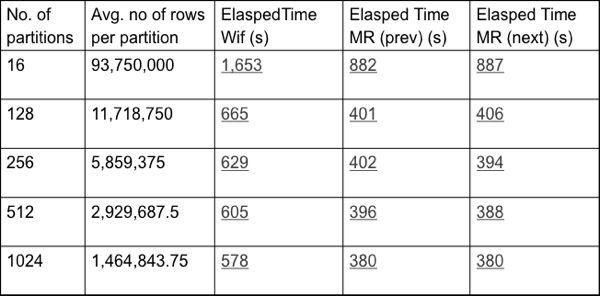

The performance results for these three approaches are shown below: column 3 shows the SQL using window functions and columns 4 & 5 show the performance figures for MATCH_RECOGNIZE based on using either PREV() or NEXT(). As you can see from the results, it is worth investigating how you create your comparison test in terms of looking forward or backwards to do the comparison test because there can be differences in performance. In general the 12c MATCH_RECOGNIZE code is between 1.5x and 1.9x faster compared to using window functions.

the first search was based on a sessionization pattern using the orders table and this was compared to using a window functionLet's examine these two use cases in more detail.

the second search was based on a W-pattern using the line-item table and this was also compared to using a window function.

Sessionization

To create the first part of the sessionization workflow we took the original source data and used the USER_ID as the PARTITION BY key and the timestamp for the ORDER BY clause. In this example we defined a session as a sequence of one or more events with the same partition key (USER_ID) where the gap between the timestamps is less than 10 seconds - obviously the figure for the gap is completely arbitrary and could be set to any number as required. The objective for this first step is to detect the various sessions and assign a surrogate session id to each session within each partition (USER_ID).This creates an output result set that delivers a simplified sessionization data set as shown here:

The SQL to create the initial result set is as follows:

SELECT user_id, session_id start_time, no_of_events, duration

FROM Events MATCH_RECOGNIZE

(PARTITION BY User_ID ORDER BY Time_Stamp

MEASURES match_number() session_id,

count(*) as no_of_events,

first(time_stamp) start_time,

last(time_stamp) - first(time_stamp) duration

ONE ROW PER MATCH

PATTERN (b s*)

DEFINE

s as (s.Time_Stamp - prev(s.Time_Stamp) <= 10)

)

;

as a comparison for how to achieve the above using analytical window functionsCREATE VIEW Sessionized_Events as SELECT Time_Stamp, User_ID, Sum(Session_Increment) over (partition by User_ID order by Time_Stampasc) Session_ID FROM ( SELECT Time_Stamp, User_ID, CASE WHEN (Time_Stamp - Lag(Time_Stamp) over (partition by User_ID order by Time_Stampasc)) < 10 THEN 0 ELSE 1 END Session_Increment FROM Events) ;SELECT User_ID, Min(Time_Stamp) Start_Time, Count(*) No_Of_Events, Max(Time_Stamp) -Min(Time_Stamp) Duration FROM Sessionized_Events GROUP BY User_ID, Session_ID;

As you can see the non-12c approach is a little more complex to understand but it produces the same output - i.e. our initial sessionized data set.

However, to get business value from this derived data set we need to do some additional processing. Typically, with this kind of analysis the business value of within the data emerges only after aggregation which in this case needs to by session. We need to reduce the data set to a single tuple, or row, per session with some derived attributes

- Within-partition Session_ID

- Number of events in a session

- Total duration

Here is code based on using the PREV() function to check the current record against the previous record:

select count(*)

from ( select /* mr_sessionize_prev */ *

from

( select o_pbykey, session_id, start_time, no_of_events, duration

from orders_v MATCH_RECOGNIZE

(

PARTITION BY o_pbykey

ORDER BY O_custkey, O_Orderdate

MEASURES match_number() session_id, count(*) as no_of_events,

first(o_orderdate) start_time,

last(o_orderdate) - first(o_orderdate) duration

PATTERN (b s*)

DEFINE s as (s.O_Orderdate - prev(O_Orderdate) <= 100)

)

)

where No_Of_Events >= 20

);

Here is code based on using the NEXT() function to check the current record against the next record:

select count(*)

from ( select /* mr_sessionize_prev */ *

from

( select o_pbykey, session_id, start_time, no_of_events, duration

from orders_v MATCH_RECOGNIZE

(

PARTITION BY o_pbykey

ORDER BY O_custkey, O_Orderdate

MEASURES match_number() session_id, count(*) as no_of_events,

first(o_orderdate) start_time,

last(o_orderdate) - first(o_orderdate) duration

PATTERN (s* e)

DEFINE s as (next(s.O_Orderdate) - s.O_Orderdate <= 100)

)

)

where No_Of_Events >= 20

Finally we can compare the 12c MATCH_RECOGNIZE code to the typical 11g code using window functions (which in my opinion is a lot more complex):

select count(*)

from (

select /* wf */ *

from (select O_pbykey, Session_ID, min(O_Orderdate) Start_Time,

count(*) No_Of_Events,

(max(O_Orderdate) - Min(O_Orderdate)) Duration

from ( select O_Orderdate, O_Custkey, o_pbykey,

sum(Session_Increment)

over(partition by o_pbykey order by O_custkey, O_Orderdate) Session_ID

from ( select O_Custkey, O_Orderdate, o_pbykey,

case when (O_Orderdate –

Lag(O_Orderdate)

over(partition by o_pbykey

order by O_custkey, O_Orderdate)) <= 100 -- Threshold

then 0 else 1 end Session_Increment

from orders_v

)

)

group by o_pbykey, Session_ID

)

where No_Of_Events >= 20

The output would look something like this:

The performance results for these three approaches are shown below: column 3 shows the SQL using window functions and columns 4 & 5 show the performance figures for MATCH_RECOGNIZE based on using either PREV() or NEXT(). As you can see from the results, it is worth investigating how you create your comparison test in terms of looking forward or backwards to do the comparison test because there can be differences in performance. In general the 12c MATCH_RECOGNIZE code is between 1.5x and 1.9x faster compared to using window functions.

Comments

Post a Comment