Analyzing our Big Data Lite movie app clickstream data

Since last year's OpenWorld I have posted quite a few articles on our new 12c pattern matching feature:

- OLL Live webcast - Using SQL for Pattern Matching in Oracle Database

- OOW content for Pattern Matching….

- SQL analytical mash-ups deliver real-time WOW! for big data

- OTN Virtual Developer Day content now available on-demand

- Sessionization with 12c SQL pattern matching is super fast

- Oracle Technology Network's FREE Virtual Database Developer Day in EMEA

To date most of my online demos and tutorials have used a very simple data set consisting of a single table with 60 rows of fictitious trade data. Over the last few months I have been searching around for a slightly bigger and more challenging data set to use. Fortunately, our BIg Data PMs (led by Marty Gubar) have put together a very rich big data schema as part of their recently launched Big Data Virtual Machine that you can download from our OTN web page, see here: http://www.oracle.com/technetwork/database/bigdata-appliance/oracle-bigdatalite-2104726.html.

The data set is based around an online customer movie application. Here is a little background…

Oracle MoviePlex Demo Application

Oracle MoviePlex is a fictitious on-line movie streaming company. Customers log into Oracle MoviePlex where they are presented with a targeted list of movies based on their past viewing behavior. Because of this personalized experience and reliable and fast performance, customers spend a lot of money with the company and it has become extremely profitable.

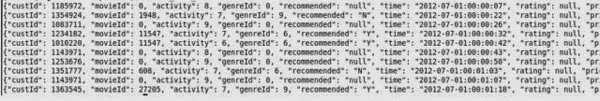

As the users watch movies, search for their favorite actors, rate their movies the system records all the clicks in a log file. The contents of that log file looks like this:

In its raw state this information is not very helpful. It needs a lot of processing to convert the various data points into usable information. In many cases companies have been turning to Hadoop and its related Java-based programming language MapReduce to process and convert these types of files into usable information. Most business users will want to summarise this information by customer and/or movie and then merge this information with other data sets. So how can we make access to and analysis of this type of data much easier? As part of this post I am going to compare the Hadoop-Java-MapReduce approach with an alternative approach using 12c SQL. My objective is not to determine which is solution is the best because each approach has its own camp of supporters. Once we have reviewed the two solutions I will put everything into context and make some recommendations…..so let's bring on the code!

Sessionization using Java

Accessing the Avro file

At the moment In the context of Big Data, everything seem to revolve around Hadoop, MapReduce and Java. It is quite natural for a big data developer to extend their basic map reduce processing to include more complicated requirements. In the case of our movie demo there is a lot of processing that needs to be done using the native features of Hadoop to collect and process the weblog file being generated by our application. There is an excellent video prepared by Marty Gubar (Director of Product Management for Big Data) which explains this process. This is part four of a six-part series that explains the movieplex demo: Part 4. Turn Clicks into Value - Flume & Hive. The movie demo lasts about 5 mins and you can watch here: https://www.youtube.com/watch?v=IwrjJUoUwXY.

The steps shown in the movie explain how to access the avro file and then how to clean the data to provide some interesting additional metrics.

Calculating the sessionization data

Creating the sessionization analysis is a little more complicated. In fact, it requires 370 lines of Java code. Here is the Java code we created for doing the sessionization analysis (the code window is scrollable so you can review the very long code sample):

The actual logic for the sessionization analysis is about 100 lines of code as shown here (at s before, the code window is scrollable so you can review the very long code sample):

As you can see from the code listing this requires a strong knowledge of Java and with 370 lines of code, if we decide to change the details of the pattern that we are searching for it is going to be a lengthy process to make the required changes. Making anything changes to the code to reflect changing business requirements is definitely going to be beyond the skills of your average business analyst and/or data scientists and this might negatively impact the level of project agility.

Making life easier and richer with SQL

My personal view is that sessionization analysis is quite a sophisticated requirement and is best achieved using SQL because the requirements and parameters for the analysis will naturally evolve over time as new questions are triggered by existing answers. Therefore, you need an agile approach to analytics that can deal with constant changing requirements during the data discovery phase.

Accessing the Avro file

First step is to create a DIRECTORY object to access the raw data file:

CREATE DIRECTORY session_file_dir AS '/home/oracle/applog';

GRANT READ, WRTIE ON DIRECTORY session_file_dir to pmuser;

Next I created an external table over the data file. Notice that in the avro file, each key column has an identifier and a value.

{"custId":1077225"movieId":100"genreId":8"time":"2011-07-30:22:55:32""recommended":"N""activity":6}

The objective for this external table was to keep the processing as simple as possible:

CREATE TABLE RAW_SESSION_DATA

(

CUST_ID VARCHAR2(20)

, MOVIE_ID VARCHAR2(20)

, GENRE_ID VARCHAR2(20)

, SESSION_DATE VARCHAR2(50)

, RECOMMENDED_ID VARCHAR2(20)

, ACTIVITY_ID VARCHAR2(20)

, RATING_ID VARCHAR2(20)

)

ORGANIZATION EXTERNAL

(

TYPE ORACLE_LOADER

DEFAULT DIRECTORY SESSION_FILE_DIR

ACCESS PARAMETERS

(RECORDS DELIMITED BY NEWLINE

NOBADFILE

NODISCARDFILE

NOLOGFILE

FIELDS TERMINATED BY ','

OPTIONALLY ENCLOSED BY "'"

MISSING FIELD VALUES ARE NULL)

LOCATION (SESSION_FILE_DIR: 'movieapp_30months.log')

)

REJECT LIMIT 1;ALTER TABLE RAW_SESSION_DATA

PROJECT COLUMN REFERENCED;

Cleaning the data

Now the next stage is to clean the data and remove the column identifiers such as custId, movieId, genreId etc. To do this processing I simply used the SQL SUBSTR() function. At the same time I decided to breakout the activity ID column to create unique columns for each type of activity. This approach is useful if you are going to be using the data as part of a data mining project because in many cases it useful to uniquely identify specific attributes. In many cases you will want to create a fact table from this process but in this case I have created a view while I do some basic prototyping:CREATE OR REPLACE VIEW VW_SESSION_DATA AS SELECT

SUBSTR(CUST_ID,11) AS CUST_ID

, CASE SUBSTR(MOVIE_ID,11) WHEN 'null' THEN null ELSE SUBSTR(MOVIE_ID,11) END AS MOVIE_ID

, CASE SUBSTR(GENRE_ID,11) WHEN 'null' THEN null ELSE SUBSTR(GENRE_ID,11) END AS GENRE_ID

, TO_DATE(SUBSTR(SESSION_DATE, 9,19), 'YYYY-MM-DD HH24:MI:SS') AS SESS_DATE

, CASE SUBSTR(RECOMMENDED_ID,15) WHEN 'null' THEN null ELSE SUBSTR(RECOMMENDED_ID,16,1) END AS REC_ID , (CASE SUBSTR(ACTIVITY_ID,12,2) WHEN '1' THEN '1' WHEN '2}' THEN '2' WHEN '3}' THEN '3' WHEN '4}' THEN '4' WHEN '5}' THEN '5' WHEN '6}' THEN '6' WHEN '7}' THEN '5' WHEN '8}' THEN '8' WHEN '9}' THEN '9' WHEN '10' THEN '10' WHEN '11' THEN '11' ELSE null END) AS ACTIVITY_ID , CASE SUBSTR(ACTIVITY_ID,12) WHEN 'null' THEN null ELSE SUBSTR(ACTIVITY_ID,12,1) END as ACTIVITY_ID

, CASE SUBSTR(RATING_ID,9) WHEN 'null' THEN null ELSE SUBSTR(RATING_ID,10,1) END as RATING_ID

, case SUBSTR(ACTIVITY_ID,12,1) WHEN '1' THEN 'Y' END as act_rate

, case SUBSTR(ACTIVITY_ID,12,1) WHEN '2' THEN 'Y' END as act_complete

, case SUBSTR(ACTIVITY_ID,12,1) WHEN '3' THEN 'Y' END as act_pause

, case SUBSTR(ACTIVITY_ID,12,1) WHEN '4' THEN 'Y' END as act_start

, case SUBSTR(ACTIVITY_ID,12,1) WHEN '5' THEN 'Y' END as act_browse

, case SUBSTR(ACTIVITY_ID,12,1) WHEN '6' THEN 'Y' END as act_list

, case SUBSTR(ACTIVITY_ID,12,1) WHEN '7' THEN 'Y' END as act_search

, case SUBSTR(ACTIVITY_ID,12,1) WHEN '8' THEN 'Y' END as act_login

, case SUBSTR(ACTIVITY_ID,12,1) WHEN '9' THEN 'Y' END as act_logout

, case SUBSTR(ACTIVITY_ID,12,1) WHEN '10' THEN 'Y' END as act_incomplete

, case SUBSTR(ACTIVITY_ID,12,1) WHEN '11' THEN 'Y' END as act_purchase

FROM RAW_SESSION_DATA;

Running a query against this view generates our basic data set that we can then feed into our MATCH_RECOGNIZE clause to create the sessionization result set.

Is there a better, simpler way to deal with the avro file? Well yes there is. As part of the Database 12c release programme we will be adding in-database support for JSON data. This will allow us to take the raw avro file and access stand query it directly from within the database without the need to go through the complicated cleaning process! At the moment we have not made any collateral (PPTs etc) on this feature publicly available so I can't provide you with any supporting links that will give you more information. However, once this feature is released I will revisit this little demo to explore how the new JSON feature can be used along side SQL pattern matching.

Calculating the sessionization data

The code to calculate the sessionization information is as follows:

SELECT *

FROM vwsession_data

MATCH_RECOGNIZE

(PARTITION BY cust_id ORDER BY sess_date

MEASURES match_number() session_id,

COUNT(*) no_of_events,

FIRST(sess_date) start_date,

TO_CHAR(FIRST(sess_date), 'hh24:mi:ss') start_time,

LAST(sess_date) end_date,

TO_CHAR(LAST(sess_date), 'hh24:mi:ss') end_time,

TO_CHAR(ROUND(TO_NUMBER(LAST(sess_date) - FIRST(sess_date)) * 1440), '999,999') duration,

LAST(activity_id) last_act_id,

COUNT(act_rate) act_rate,

COUNT(act_complete) act_complete,

COUNT(act_pause) act_pause,

COUNT(act_start) act_start,

COUNT(act_browse) act_browse,

COUNT(t(act_list) act_list,

COUNT(act_search) act_search,

COUNT(act_login) act_login,

COUNT(act_logout) act_logout,

COUNT(act_incomplete) act_incomplete,

COUNT(act_purchase) act_purchase

ONE ROW PER MATCH

PATTERN (strt s+)

DEFINE

s as (round(to_number(sess_date - prev(sess_date)) * 1440) <= 5)

);

This statement uses many of the MATCH_RECOGNIZE features that I have discussed in previous posts. In this specific example I have decided to set the duration between events within a session as 5 minutes. That means if the user does nothing for about 5 minutes then I will assume that a new session has started even if the user has not logged out.

Beyond the basic sessionization model there is another interesting pattern that we can search for within our data set. If a user starts watching a movie then we might not get another event being logged for over two hours. Therefore, we could look for instances where the time between events is less than 150 minutes (assuming most movies last around 2 hours) and there is at least one (or more) "start" event is logged and at least one (or more) "complete" event is logged,

PATTERN (strt s* f+ c+)

DEFINE

f as act_start = 'Y',

c as act_complete = 'Y',

s as (round(to_number(sess_date - prev(sess_date))*1440) <= 150)

The output from this query is shown below:

Looking at the results what really jumps out is the level of interaction on the site before, during and after the user has watched a movie. Many of the records that are returned show users searching our movie database, going to their lists of favourite movies and rating the movies they have just watched. All this implies that if we can get customers to begin watching a movie there is a huge knock-on effect in terms of how long they remain on the site and the number of "events" they create while on the site. This is the sort of information that is useful to marketing and campaign management teams.

A different view: Calculating the sessionization data for each movie

While developing the above analysis I began to wonder about analysing the data not from a customer perspective but from the point of view of each movie. This would allow me to look at the session activity over time for each movie and understand the usage patterns across movies and genres. For example, are there certain movies or types of movies that are more or less likely to be rated. Is the process of watching a movie the same across all movies in terms of the events that are registered during a session? There are all sorts of new avenues of analysis that could be created from looking at the data in this way.

So how easy would it be to change the focus of the analysis to movies? Well the code took me approximately 30 seconds to write - actually cut & paste from the customer sessionization example, switch the customer id for the movie id and finally removed a few fields. Here is the new code:

SELECT *

FROM SESSION_DATA td

MATCH_RECOGNIZE

(PARTITION BY movie_id ORDER BY sess_date

MEASURES COUNT(*) no_of_events,

FIRST(sess_date) start_date,

TO_CHAR(first(sess_date), 'hh24:mi:ss') start_time,

LAST(sess_date) end_date,

TO_CHAR(LAST(sess_date), 'hh24:mi:ss') end_time,

TO_CHAR(ROUND(TO_NUMBER(LAST(sess_date) - FIRST(sess_date)) * 1440), '999,999') duration,

MIN(activity_id) act_id,

MAX(activity_id) last_act_id,

COUNT(act_rate) act_rate,

COUNT(act_complete) act_complete,

COUNT(act_pause) act_pause,

COUNT(act_start) act_start,

COUNT(act_browse) act_browse,

COUNT(act_list) act_list,

COUNT(act_search) act_search,

COUNT(act_login) act_login,

COUNT(act_logout) act_logout,

COUNT(act_incomplete) act_incomplete,

COUNT(act_purchase) act_purchase

ONE ROW PER MATCH

PATTERN (strt s*)

DEFINE

s as (ROUND(TO_NUMBER(sess_date - PREV(sess_date))*1440) <= 120)

) MR;

The speed at which you can create these additional/alternative result sets is really great! It is now relatively easy to continue this discovery process by analysing the information by movie genre or any of the other related attributes.

Summary

The main take-away from this post is that (hopefully) I shown how easy it is to use SQL for sessionization analysis. Firstly in terms of creating the framework to support the normal "sessionization" transformation process: taking the source log file, extracting the data points and then grouping the information by user. The great news is that processing of the source file will be greatly simplified when the JSON feature is released because it will be possible to query the log file in a much simpler way to extract the required data points. Secondly, SQL provides a much simpler and easier way to support the evolutionary of the discovery process. Changing the focus from customer analysis to movie analysis is both quick and easy. I am not convinced that a Java-based approach to data discovery can offer the same level of simplicity and agility but I will let you be the judge of that last statement….

Technorati Tags: Analytics, Big Data, Data Warehousing, Database 12c, Oracle Database 12c, Pattern Matching, SQL Analytics

Comments

Post a Comment