2013 - The year of In-Database Analytics!

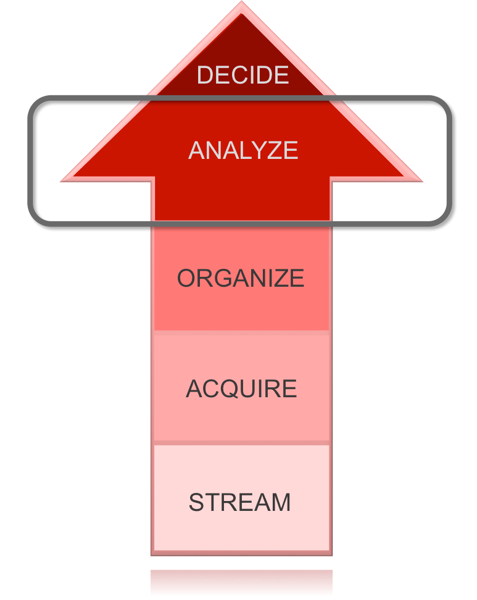

Many of our customers spent 2012 kick-starting big data projects. Based on the number of analyst reports, news articles and general chatter on the web, I think it is fair to say that 2012 was the Year of Big Data. To help our customers plan and coordinate their big data projects, we developed a very simple, high-level, five-step workflow for big data (Stream-Acquire-Organize-Analyze-Decide), as shown here:

Many of the projects that our customers stated in 2012 have at least completed the first three steps (with some having completed stages 4 and 5 and now looping back to stage 1 to kick-start the next round of data enrichment and knowledge discovery):

1) STREAM - all the relevant data streams have been identified and the APIs coded to collect the data on a regular basis

2) ACQUIRE - these data streams are increasing landing on our Big Data Appliance and that is great news!

3) ORGANIZE - many project teams have completed the first round of light-touch transformations and made their new data sets available for analysis. Typically this is being done using R, Java and our Big Data Connectors

We can see that during 2012 an awful lot was achieved. For 2013 we are going to see everyone focusing on the ANALYZE phase of the workflow and my prediction for 2013 is that this will be the year of "Big Data Analytics". The good news for Oracle customers is that you do not have to wait until 2015 to analyse your Hadoop data.

The most important thing is that customers must learn the key lesson from those old days of data warehousing There are two ways to manage ANALYZE the analyse phase:

The danger for many organizations is that in delivering this type solution to the business they simply create “analytic silos” that are designed to resolve specific business problems. These analytic silos create unnecessary cost, they increase complexity and cause increased levels of data movement across the network as data is pushed in and results pulled out from each silo. This continuous movement of data creates time delays in being able to view results because business questions usually require multiple levels of analysis using lots of different types of analytical functions. The longer it takes to arrive at an answer the greater the chance you will make the wrong business decisions or completely miss out on an significant opportunity.

As before each of these specialised platforms have their own proprietary engine, tools and languages and this makes it difficult to kick-start and grow a project. Broadening the analysis can be complex and in many cases impossible. There has to be a better way to analyse data!

2) Taking the analysis to the data with In-Database Analytics

The end game for all big data projects (whether they realise it or not when the project first starts out) is to deliver an environment that offers the following: a broad range of analytical tools that can analyse all types of data and be accessed using existing skills and tools. What you need is a single place to run your analysis against all your data so your business users can easily apply layer after layer of analysis. This ensures that the process of transforming data into insight delivers the right data at the right time, to the right person and on the right device.

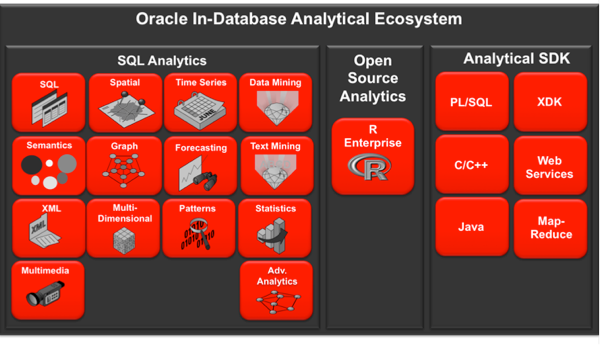

To get the most from your data streams it is important that your analysis has the ability to incorporate the broadest range of analytical functions and that these can be applied to all your data steams with results delivered in a real-time. The ANALZYZE phase in our workflow leverages the Oracle Database. The Oracle Database started with a rich set of built-in SQL analytic features that allowed developers to process data directly inside the database using standard SQL syntax rather than having to move data to a separate platform, process it using a proprietary language and then return the results to the database. Oracle's approach to analytics is to take the "analysis to the data", i.e. Oracle provides in-database analytics. In-database analytics offers some important advantages over the three other options outlined above:

Why is in-database analytics so important?

By making all the data available from inside the data warehouse and moving the analytics to the data Oracle is able to provide a wide range of analytical functions and features and ensure data governance and security is enforced at every stage of the analytic process, while at the same time providing timely delivery of analytical results. Oracle’s single integrated analytic platform offers many advantages.

To help explain those advantages I am busy writing a whitepaper on precisely this topic and it should be released shortly. The whitepaper will explore the points I have highlighted above and will explain how Oracle can help you transform all your data into real actionable insight. When you change the way you analyse your data by moving the algorithms to the data rather than the traditional approach of extracting the data and moving it to the algorithms for analysis, it CHANGES EVERYTHING, including your business. You can know more about your products, your operations and your customers by having that insight delivered at the right time, to the right person and on the right device.

As part of the paper I will outline how some of Oracle’s industry leading customers are already using our in-database analytics today to help them understand more about their products, operations and customers and how many of them are layering different types of analysis one on top of the other, something that we believe is only possible with Oracle: if you want to enhance spatial analytics with data mining or drive multi-dimensional analysis using data mining or use semantics to help manage your data warehouse metadata then Oracle is the answer. There is a better way to do analysis in 2013

Which in-database analytical features do you use?

I have started a poll on LinkedIn to capture information about which in-database features people use the most. If you want to vote in the poll then click [here] - if you are not a member of the group then click the "request to join" button and I will approve you as soon as I get the email alert from LinkedIn

As soon as the whitepaper is ready I will post a note on my blog.

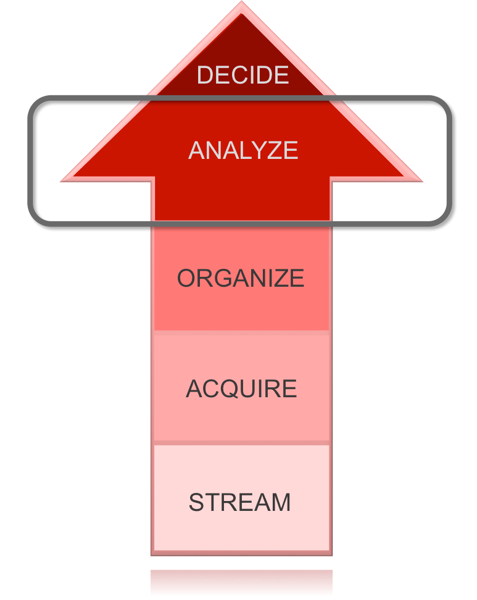

Many of the projects that our customers started in 2012 have completed at least the first three steps (some have also completed stages 4 and 5 and are now looping back to stage 1 to kick-start the next round of data enrichment and knowledge discovery):

1) STREAM - all the relevant data streams have been identified and the APIs coded to collect the data on a regular basis

2) ACQUIRE - these data streams are increasingly landing on our Big Data Appliance, and that is great news!

3) ORGANIZE - many project teams have completed the first round of light-touch transformations and made their new data sets available for analysis. Typically this is being done using R, Java and our Big Data Connectors.

We can see that during 2012 an awful lot was achieved. For 2013 we are going to see everyone focusing on the ANALYZE phase of the workflow and my prediction for 2013 is that this will be the year of "Big Data Analytics". The good news for Oracle customers is that you do not have to wait until 2015 to analyse your Hadoop data.

The most important thing is that customers learn the key lesson from the early days of data warehousing. There are two ways to manage the ANALYZE phase:

- Use subject-specific specialised analytic engines and take the data to the analysis

- Use an analytically rich database and take the analysis to the data

1) Taking the data to the analysis with specialised analytic engines

The danger for many organizations is that, in delivering this type of solution to the business, they simply create “analytic silos” designed to resolve specific business problems. These analytic silos create unnecessary cost, increase complexity and cause more data movement across the network as data is pushed into, and results are pulled out of, each silo. This continuous movement of data delays results, because business questions usually require multiple levels of analysis using many different types of analytical functions. The longer it takes to arrive at an answer, the greater the chance that you will make the wrong business decision or miss a significant opportunity altogether.

As in the early days of data warehousing, each of these specialised platforms has its own proprietary engine, tools and languages, which makes it difficult to kick-start and grow a project. Broadening the analysis can be complex and in many cases impossible. There has to be a better way to analyse data!
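To make the data-movement cost of the first approach concrete, here is a minimal sketch contrasting the two approaches listed above. It uses Python's built-in `sqlite3` module with an in-memory database purely as a stand-in for a real warehouse (it is not Oracle), and the `sales` table, its rows and the function names are hypothetical. "Taking the data to the analysis" pulls every raw row out to the client before aggregating; "taking the analysis to the data" pushes the aggregation into SQL so that only the results leave the database.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Build a small in-memory table of hypothetical sales rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
    rows = [("north", 100.0), ("north", 250.0), ("south", 75.0),
            ("south", 125.0), ("east", 300.0)]
    conn.executemany("INSERT INTO sales VALUES (?, ?)", rows)
    return conn


def data_to_analysis(conn: sqlite3.Connection):
    """Move every raw row to the client, then aggregate outside the database.

    Returns (totals per region, number of rows transferred)."""
    rows = conn.execute("SELECT region, amount FROM sales").fetchall()
    totals = {}
    for region, amount in rows:
        totals[region] = totals.get(region, 0.0) + amount
    return totals, len(rows)


def analysis_to_data(conn: sqlite3.Connection):
    """Run the aggregation inside the database; only result rows come back.

    Returns (totals per region, number of rows transferred)."""
    rows = conn.execute(
        "SELECT region, SUM(amount) FROM sales GROUP BY region"
    ).fetchall()
    return dict(rows), len(rows)


if __name__ == "__main__":
    conn = make_db()
    print(data_to_analysis(conn))
    print(analysis_to_data(conn))
```

Both functions produce the same totals, but the first transfers all five raw rows while the second transfers only three result rows. With this toy data the difference is trivial; the point is that the raw-row count grows with the data, whereas the result count grows only with the number of groups.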

2) Taking the analysis to the data with In-Database Analytics

The end game for all big data projects (whether or not the team realises it when the project first starts out) is to deliver an environment that offers a broad range of analytical tools that can analyse all types of data and can be accessed using existing skills and tools. What you need is a single place to run your analysis against all your data, so that your business users can easily apply layer after layer of analysis. This ensures that the process of transforming data into insight delivers the right data at the right time, to the right person and on the right device.

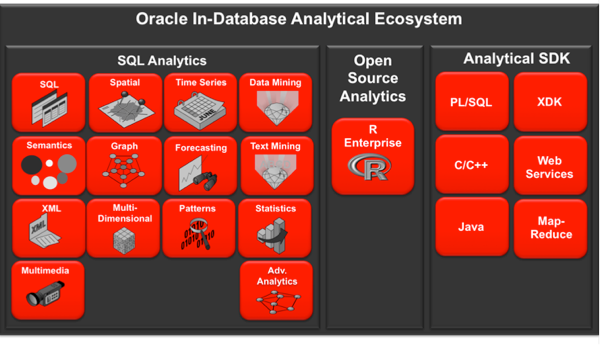

To get the most from your data streams, your analysis must be able to incorporate the broadest range of analytical functions, and these must be applicable to all your data streams with results delivered in real time. The ANALYZE phase in our workflow leverages the Oracle Database. Oracle's in-database analytics started with a rich set of built-in SQL analytic features that allow developers to process data directly inside the database using standard SQL syntax, rather than moving data to a separate platform, processing it with a proprietary language and then returning the results to the database. Oracle's approach to analytics is to take the "analysis to the data"; in other words, Oracle provides in-database analytics. In-database analytics offers some important advantages over taking the data to specialised analytic engines:

- Reduced latency - data can be analyzed in place

- Reduced risk - a single set of data security policies can be applied across all types of analysis

- Increased reusability - all data types are accessible via SQL, making it very easy to re-use analysis and analytical workflows across many tools: ETL, business intelligence reports and dashboards, and operational applications
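The reusability point can be sketched the same way. In this hypothetical example (again using Python's `sqlite3` module as a stand-in for Oracle, with made-up table, view, column and function names), an analysis built on the standard SQL window function `RANK() OVER (...)`, the kind of function Oracle documents as an analytic function, is defined once as a view inside the database. Two different consumers, standing in for a BI report and a dashboard, then reuse that same view with ordinary SQL instead of each re-implementing the ranking logic. This assumes the bundled SQLite is version 3.25 or later, which is required for window functions.

```python
import sqlite3


def build_db() -> sqlite3.Connection:
    """Create hypothetical sales data plus a reusable analytic view."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE sales (region TEXT, product TEXT, amount REAL);
        INSERT INTO sales VALUES
            ('north', 'a', 100), ('north', 'b', 250),
            ('south', 'a', 75),  ('south', 'b', 125),
            ('east',  'a', 300);
        -- The analysis lives in the database, defined once as a view.
        CREATE VIEW product_rank AS
        SELECT region, product, amount,
               RANK() OVER (PARTITION BY region ORDER BY amount DESC) AS rnk
        FROM sales;
    """)
    return conn


def top_products(conn: sqlite3.Connection):
    """Consumer 1 (e.g. a report): best-selling product in each region."""
    return conn.execute(
        "SELECT region, product FROM product_rank "
        "WHERE rnk = 1 ORDER BY region"
    ).fetchall()


def strong_leaders(conn: sqlite3.Connection, threshold: float):
    """Consumer 2 (e.g. a dashboard): regions whose top product meets a threshold."""
    rows = conn.execute(
        "SELECT region FROM product_rank "
        "WHERE rnk = 1 AND amount >= ? ORDER BY region",
        (threshold,),
    ).fetchall()
    return [region for (region,) in rows]


if __name__ == "__main__":
    conn = build_db()
    print(top_products(conn))         # [('east', 'a'), ('north', 'b'), ('south', 'b')]
    print(strong_leaders(conn, 200))  # ['east', 'north']
```

Because the ranking is defined in one place, a change to the analysis (or to the security policy on the underlying table) is picked up by every consumer that queries the view.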

Why is in-database analytics so important?

By making all the data available from inside the data warehouse and moving the analytics to the data, Oracle is able to provide a wide range of analytical functions and features, ensure that data governance and security are enforced at every stage of the analytic process, and at the same time deliver analytical results in a timely manner. Oracle’s single integrated analytic platform offers many advantages.

To help explain those advantages, I am writing a whitepaper on precisely this topic, and it should be released shortly. The whitepaper will explore the points I have highlighted above and explain how Oracle can help you transform all your data into real, actionable insight. When you change the way you analyse your data, moving the algorithms to the data rather than following the traditional approach of extracting the data and moving it to the algorithms, it CHANGES EVERYTHING, including your business. You will know more about your products, your operations and your customers, because that insight is delivered at the right time, to the right person and on the right device.

As part of the paper I will outline how some of Oracle’s industry-leading customers are already using our in-database analytics to understand more about their products, operations and customers, and how many of them are layering different types of analysis one on top of the other, something that we believe is only possible with Oracle. If you want to enhance spatial analytics with data mining, drive multi-dimensional analysis using data mining, or use semantics to help manage your data warehouse metadata, then Oracle is the answer. There is a better way to do analysis in 2013.

Which in-database analytical features do you use?

I have started a poll on LinkedIn to find out which in-database features people use most. If you want to vote in the poll, click [here]. If you are not a member of the group, click the "request to join" button and I will approve you as soon as I get the email alert from LinkedIn.

As soon as the whitepaper is ready I will post a note on my blog.
