Why SQL Part 2 - It has a powerful framework

In the first part (Why SQL is the natural language for data analysis) of this series of blog posts I explained why analytics is important to the business, how the volume of data along with the types of data is continuing to expand and why that makes it vital that you select the right language for data analysis. Many of us work with SQL every day and take for granted many of its unique features, its power, flexibility and the richness of the analytics. This familiarity with SQL means that sometimes we are a bit slow at preventing some of our projects investing in other big data languages such as MapReduce, Impala, Spark, Java and many of the new generation of SQL-like open source projects. While many of these new languages are considered “cool”, it is very easy to end up building new proprietary data silos or investing in a language that eventually is replaced by another open source project or eventually fails to deliver the required analytics.

One of the aims of this series of blog posts is to refresh your memory about why SQL has been so successful in the area of analytics. SQL has four unique features that make it perfect for data analysis:

- Powerful framework

- Transparent optimization

- Continuous evolution

- Standards based

The aim of this post is to explore the first of these features: powerful framework

The reason for collecting data is because it needs to be interrogated: events needs to be dissected, customers need to be profiled, product sales evaluated. This interrogation process is typically framed around a set of data rather than a single data point or row of data and mathematical rules have been created to provide a precise framework for this analytical process. The framework is “relational algebra” which was first described in 1970 by E.F. Codd while at IBM . It provides well-founded semantics for both modeling and querying a set of data.

Codd's framework established a series of basic principles that govern the construction and execution of a query and these can be summarized as follows:

- Projection

- Filter

- Join

- Aggregate

The ideas behind relational algebra (set theory) are not just limited to the points that will be covered in this particular section. These concepts have implications that cascade right across the other unique features. While the elements of this basic framework are constant the way that these elements are implemented within many of the current big data related SQL-like languages differ based on the approach adopted by each of the open source projects/languages.

Let’s explore each the four basic principles starting with “Projections”.

1. Projections

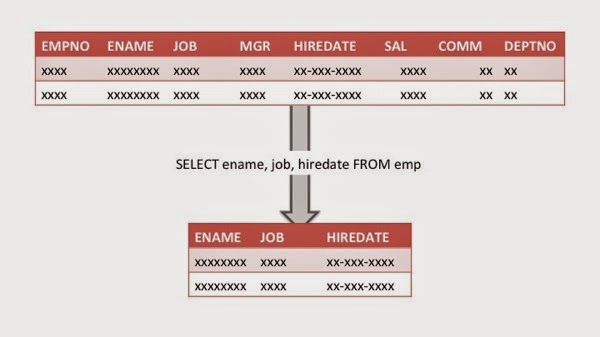

When reviewing the concept of a “set of data” in the context of SQL it is important to remember that each source set of data contains two separate sets: a column set and a row set. When interrogating a set of data the first step is to determine which columns within the set are of interest., projections of the total set of columns.. When a database system does projections, it is simply determining which columns are necessary for a given analysis, and discards the other columns from the analysis.

The Oracle Database has a comprehensive metadata layer that supports the discovery of columns across a wide range of data sets: relational tables/views, XML documents, JSON documents, spatial objects, image-style objects (BLOBs and CLOBs), semantic networks etc.

The first part of the SELECT clause is used to list the columns of interest within the set of data and this can include all the columns rather than simply a subset. The syntax for selecting all columns within a set, in this case using a table called EMP, is:

SELECT * FROM emp;

The Oracle Database uses its metadata layer to establish the list of all column names associated with the set and then internally expands the query statement to include all the relevant column names.

Alternatively the query can specify the required columns within the set such as:

SELECT ename, job, hiredate FROM emp;

This level of sophistication (both metadata and automatic statement expansion) is missing in many data manipulation languages and this requires developers to add additional bespoke code to cope with these basic requirements. SQL also supports the concept of extended projections. This is where new data columns are created within the result set using arithmetic operations. For example, taking the columns SAL and COMM it is possible to compute the rate of commision by dividing SAL by COMM to create a new column:

SELECT

ename,

sal,

comm,

comm/sal*100 AS comm_rate

FROM emp;

Oracle’s Big Data SQL feature extends this metadata model so that it can encompass data stored inside NoSQL databases, JSON documents, and files stored on HDFS. This comprehensive metadata layer makes it very easy for developers to create dynamic SQL statements containing column projections based around a wide range of data sources.

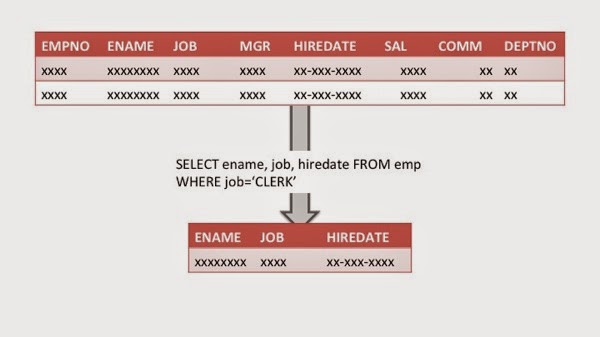

2. Filters

The next stage within the query framework is to specify the rows that are of interest. Since these cannot be identified in the same way as columns, using names, a different approach is used for row-based projections. This approach requires the use of filters – i.e. describing the attributes associated with row sets that are of interest. Within the SQL language row filtering is part of the WHERE clause syntax (these filters are also often called predicates in relational terminology).

The developer can define specific filtering criteria that rows must meet to be included in the result set. For example using the employee data set it is possible to extend our previous example to limit the set of rows returned by a query to just those associated with the job type ‘CLERK’ :

SELECT

empno,

ename,

job,

mgr,

hiredate,

sal,

comm,

deptno

FROM emp

WHERE job=”CLERK”;

Applying filters cannot only reduce the returned data set based on attributes describing the data – such as the job type above – it can also reduce the returned data set to an absolute or relative subset of the result set.

For business-driven queries this process of limiting rows is essential. A query can be structured to return a specified number or percent of rows starting with the first row after the offset. The offset allows for modification of typical questions, so that the question about highest-paid employees might skip the top ten employees and return only those from eleventh to twentieth place in the salary rankings. In a similar manner it is possible to query the employees data set by salary, skip the top ten employees and then return the top 10% of the remaining employees.

The SQL language supports a wide range of filtering comparison operators to identify specific rows:

|

While it is possible to filter rows based on values, for some types of application-driven queries this process of limiting rows can be extended. A query can be structured to return a specified number or percent of rows starting with the first row after the offset. For application developers this process of limiting rows using the SQL language is extremely flexible. For example, it can be used to aid testing or provide a pagination feature for passing data to a front-end visualization. The Oracle Database supports a number of techniques to help developers meet this requirement such as:

- FETCH FIRST N/N-PERCENT

- RANK

- ROW_NUMBER()

These features can be used within user interfaces to provide the first few rows of a data set for browsing. The SQL language has a very rich set of filtering techniques that are both simple to implement and to amend as requirements evolve over time.

3. Joins

Most query operations require the use of at least two data sets and this necessitates a “join” operation. At a simplistic level, a join is used to combine the fields within two or more data sets in a single query, based on common logical relationships between those various data sets. In our simple data example, suppose that there was a department data set in addition to the employee data set. A query might ‘join’ the department and employee data to return a single result combining data from both data sets. There are a number of ways that data sets can be joined:

- Inner - returns all rows where there is at least one match in both tables

- Full Outer - returns all rows from both tables, with matching rows from both sides where available. If there is no match, missing side will contain null.

- Outer left - returns all rows from left table, and matched rows from right table

- Outer right - returns all rows from right table, and matched rows from left table

- Cross - returns a Cartesian product of source sets, i.e. all rows from left table for each row in the right table

The process of defining and executing a SQL join is simple. The required type of join is implemented using the WHERE clause:

SELECT

d.deptno,

d.dname,

e.empno,

e.ename

FROM dept d

INNER JOIN emp e ON (e.deptno = d.deptno);

Note that there is nothing in this SQL query that describes the actual join process for the two data sets.

In many procedural languages the process of joining two sets of data can be complicated due to the need to explicitly code each join structure and join algorithm for each combination of columns across the various data sets. The level of complication builds as additional data sets are added to the query; different join operations are required for combinations of data sets such as taking into account missing values within data sets. The join order of multiple data sets has to be decided on and coded procedurally. It is very easy for the amount and complexity of code to increase dramatically as the number of data sets increases. This makes data processing validation and debugging very challenging. Once additional requirements are applied, such as aggregating specific data points, the level of code complexity within procedural languages can escalate very quickly.

For example when we join the two tables EMP and DEPT there could be departments that contains no employees. Using an inner join, the departments with zero employees are not be returned. If a query needs to return a count of the number of employees in every department, including the ‘empty’ departments containing zero employees, then an outer join is required as shown here:

SELECT

d.deptno,

count(e.empno)

FROM dept

LEFT OUTER JOIN emp e ON (e.deptno = d.deptno)

GROUP BY d.deptno ORDER BY d.deptno;

Hopefully, if you study the above code samples It is clear that SQL’s join code is easily readable – and code-able. The developer only specifies the semantic join condition and leaves the processing details - such as the order of the joins - to the SQL engine.

4. Aggregate

Aggregation is an important step in the process of analyzing data sets. Most operational, strategic and discovery-led queries rely on summarizing detailed level data. According to a TDWI report “Data Aggregation - Seven Key Criteria to an Effective Aggregation Solution” - up to 90% of all reports contain some level of aggregate information. Therefore, the ability to simply and efficiently aggregate data is a key requirement when selecting a language. If the aggregation process is correctly implanted it can generate significant performance benefits, which creates new opportunities for organizations to boost their overall analysis and reporting capabilities.

The types of aggregation applied to a data set can vary from simple counts to sums to moving averages to statistical analysis such as standard deviations. Therefore, the ability to simply and efficiently aggregate data is a key requirement for any analytical data language. Procedural languages, such as the Java based MapReduce, are more than capable of taking a data set and aggregating it, or reducing it, to provide a summary result set. The approach is adequate for most simple data discovery style queries, i.e. those that include basic counts. However, adding more complex aggregation requirements quickly increases the amount of code required to manage the computations. SQL has a rich set of data aggregation capabilities that make it easy to work on all rows in a set. For example, it is possible to sum all rows within a single column as follows:

SELECT

SUM(sal) AS total_salary

FROM emp;

It is easy to extend this query to accommodate new requirements such as a count of the number of employees and the average salary:

SELECT

COUNT(empno) AS no_of_employees,

SUM(sal) AS total_salary,

AVG(sal) As average_salary

FROM emp;

Taking these examples even further, it is a simple step to group rows into specific categories using the GROUP BY clause. The aggregate functions and GROUP BY clause group can be used to group the data and then apply the specific aggregate function(s) to count the number of employees, sum the salary and calculate the average salary in each department within a single query as shown below:

SELECT

deptno,

COUNT(empno) AS no_of_employees,

SUM(sal) AS total_salary,

AVG(sal) AS average_salary

FROM emp

GROUP BY deptno;

The ANSI SQL:2003 standard (more on this towards the end of this paper) extended the process of aggregating data by introducing the concept of analytic functions. These functions divide a data set into groups of rows called partitions making it is possible to calculate aggregates for each partition and for rows within each partition.

The use of additional keywords define the how analytical functions, such as average, sum, min, max etc., will be evaluated within each partition. Using the previous example, the statement below creates analytical reporting totals such as: total salary within each department, moving average salary within each department and average salary:

SELECT

d.deptno,

d.dname,

e.ename,

e.sal AS sal, SUM(e.sal) OVER (ORDER BY e.deptno)) AS dept_sal,

ROUND(AVG(e.sal) OVER (PARTITION BY e.deptno ORDER BY e.empno)) AS moving_avg_sal,

ROUND(AVG(e.sal) OVER (ORDER BY e.deptno)) AS avg_dept_sal

FROM dept d

LEFT OUTER JOIN emp e ON (e.deptno = d.deptno);

Compare the simplicity of the above SQL code for computing a moving average with the equivalent MapReduce code posted by Cloudera on Github as part of the blog post “Simple Moving Average, Secondary Sort, and MapReduce”. This “simple” example consists of twelve java program files to perform the moving average calculation, manage the job parameters and specify the framework for the associated workflow. Making changes to the calculations as business requirements evolve will be a significant challenge given the amount of code within this “simple” project.

SQL offers an additional ability to apply restrictions using columns that are returned as part of the result set. The HAVING clause filters results based on calculations in the SELECT clause and/or aggregations derived from the GROUP BY processing, in the same way that <br> filtering clause is applied, for example:

SELECT

deptno AS department,s

COUNT(empno) AS no_employees,

AVG(sal) AS avg_sal,

SUM(sal) AS tot_sal

FROM emp

GROUP BY deptno

HAVING avg(sal) > 2500;

Using SQL, developers and DBAs can leverage simple, convenient and efficient data aggregation techniques that require significantly less program code compared to using other analytical programming languages. The simplicity provided by SQL makes it easier and faster to construct, manage and maintain application code and incorporate new business requirements.

Summary

Hopefully within this blog post I have explained the primary operators that are part of relational algebra (set theory). Given that most of us use SQL every single day it is very easy to forget the power and sophistication that is going on under the covers. It all gets taken for granted until you find yourself working with a different language that takes a completely different framework.

Hopefully, it is clear that SQL offers a simple and efficient way to write queries compared to some of the other newer big data related languages that are emerging through the open source community. This concludes my look at the first of the four key reason as to why SQL is one of the most successful languages. In my next post, which will probably appear towards the end of this week, I will explore why SQL’s transparent optimization is such a critical feature.

Technorati Tags: Analytics, Big Data, Data Warehousing, Database 12c, Oracle Database 12c, SQL Analytics

Comments

Post a Comment